C++ Programmer's Guide#

If you are new to pylon, Basler recommends making yourself familiar with the pylon C++ API first by reading the Getting Started section of the pylon C++ Programmer's Guide.

C++ Programming Samples#

On Windows, to open the folder containing the programming samples for blaze cameras, press the Win key, go to the Basler folder, and choose blaze Samples. A File Explorer window opens. The C++ programming samples are located in the cpp folder.

On Linux, the C++ samples are located in the /opt/pylon/share/pylon/Samples/blaze/cpp folder.

Prerequisites for Building the Samples#

- Windows: Visual Studio 2017 or above

- Optional: OpenCV library

- Optional: Point Cloud Library (PCL)

- Optional: Boost libraries

- CMake 3.3 or above

- Optional (Linux only): Aravis

On an Ubuntu system, you can install the prerequisites by issuing the following command:

Info

There is no Debian package available for the Aravis library. Aravis must be built from sources instead.

How to Build the Samples#

Info

Before building the samples, copy the folder containing the samples to a location of your choice where you have read and write access.

CMake is used as the build system for the C++ samples.

Windows#

- Start Visual Studio.

- On the start page, choose the Open Folder or Open a local folder option, and navigate to the folder you copied the samples to.

- Open the cpp subfolder and click the Select Folder button.

Visual Studio runs CMake in the background. It will take a moment until you can build the samples by selecting Build All in the Build menu.

If you don't want to use Visual Studio's CMake integration, you can use the cmake-gui tool to generate Visual Studio solution and project files that you can open with Visual Studio.

If there is no CMake >= 3.3 installed, download a CMake Windows installer from the CMake website. Launch the .msi installer and follow the instructions.

To generate Visual Studio project and solution files for the blaze samples:

- Start cmake-gui.

- Click the Browse Source… button, and navigate to the folder you copied the samples to.

- Open the cpp folder by clicking the Select folder button.

- Specify a folder where the binaries will be built by clicking the Browse Build… button and choosing or creating a folder of your choice.

Basler advises against choosing the root folder of the source tree, i.e., the folder this document is located in. Common practice is to create a subfolder (e.g., named build).

- Click the Configure button.

A dialog opens.

- Choose the desired Visual Studio version and platform.

Make sure that the selected platform (x86 or x64) matches the architecture that the OpenCV and PCL libraries are built for.

- Click the Finish button to close the dialog.

The configuration procedure starts.

- Once the configuration procedure has succeeded, click the Generate button to create the Visual Studio project and solution files.

The files will be generated in the build folder that you specified earlier.

- Navigate to that build folder and open the .sln file.

Now, you can build the samples by selecting Build All in the Build menu.

Refer to the Troubleshooting topic if you experience problems building and running the samples.

Linux#

To build a sample, open a shell and navigate to the folder you copied the samples to. Then, change to the cpp subfolder and issue the following commands:

If CMake fails to find the pylon SDK, check whether the pylon SDK and the pylon Supplementary Package for blaze have been installed correctly.

If you installed the software in a location other than /opt/pylon, make sure that the PYLON_ROOT environment variable is set to the folder in which you installed pylon and the supplementary package.

Example:

If CMake fails to find the OpenCV library or the PCL, make sure that the development packages for the OpenCV library and PCL are installed.

Refer to the Troubleshooting topic if you experience problems building and running the samples.

Installing the OpenCV Library#

Windows#

You can download OpenCV from GitHub.

Make sure that you download a version of the OpenCV library that is compatible with the Visual Studio version you are going to use. If there is no suitable prebuilt version available, you have to build OpenCV from sources using the Visual Studio version you want to use for building your application.

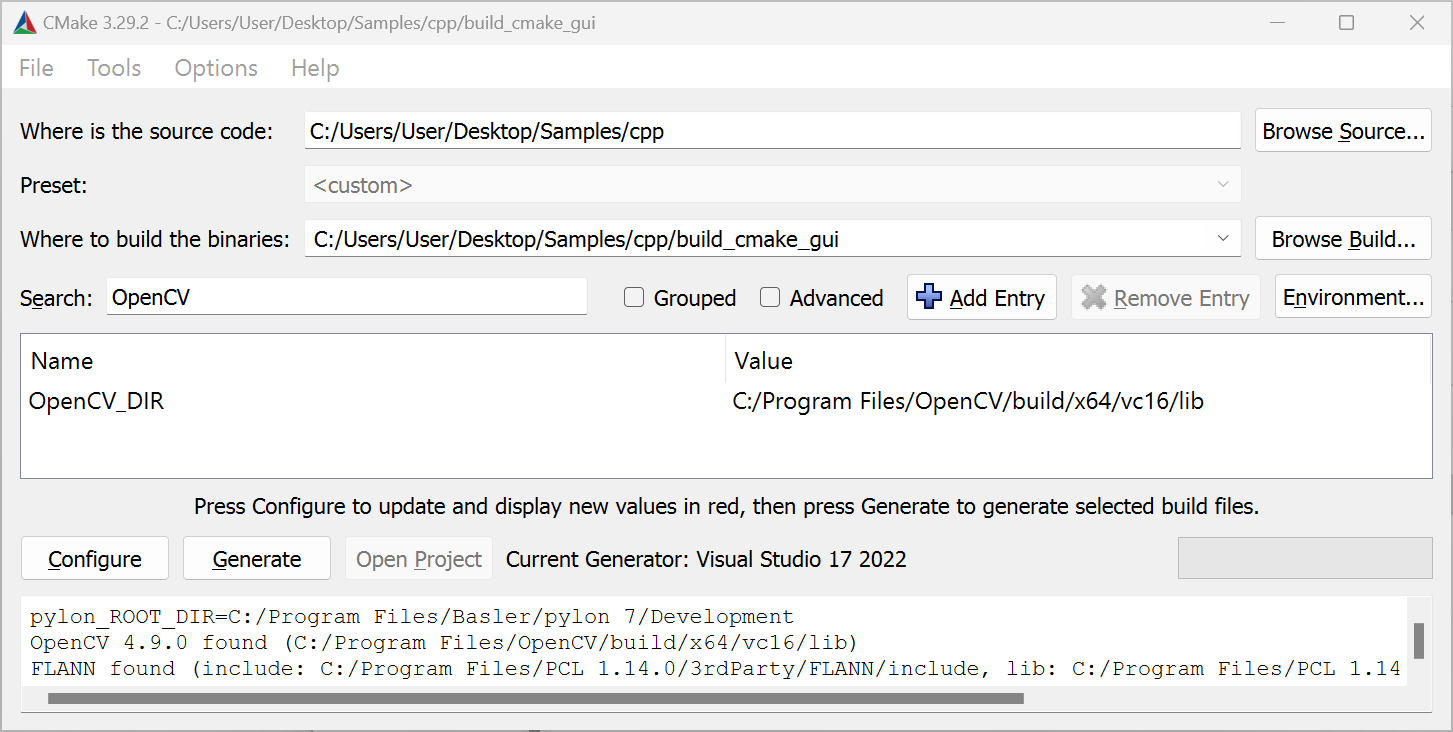

If CMake fails to find OpenCV, set the OpenCV_DIR variable to the folder containing the OpenCVConfig.cmake file.

Example

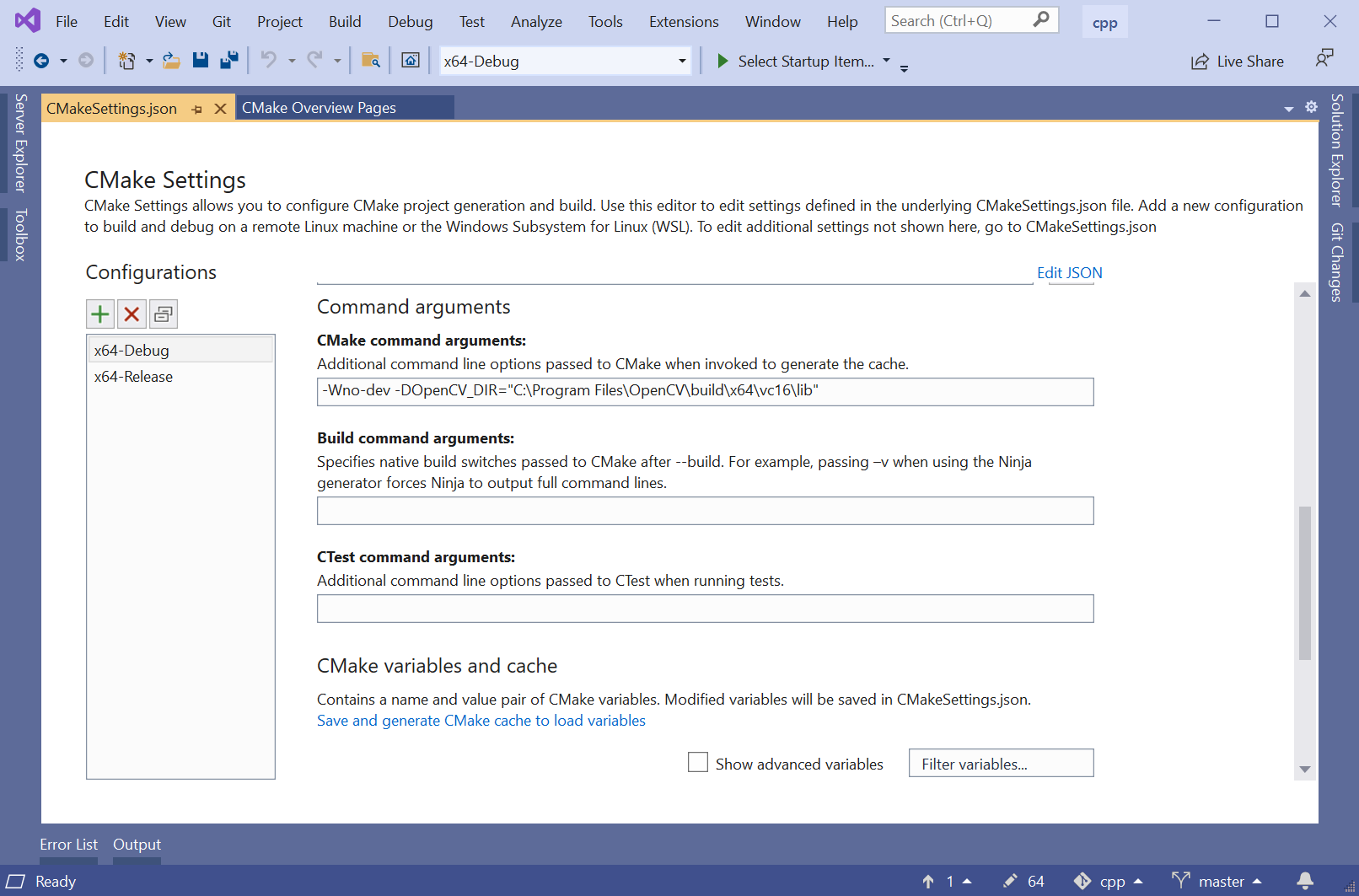

If your Visual Studio version uses a CMakeSettings.json file:

- Open the CMakeSettings.json file (CMake -> Change CMake Settings -> blazeCppSamples).

- Add the following to the cmakeCommandArgs list:

-DOpenCV_DIR=\"C:\\Program Files\\OpenCV\\build\\x64\\vc16\\lib\"

If your Visual Studio version provides the CMake Settings dialog:

- Open the CMake Settings dialog (Project -> CMakeSettings for blazeCppSamples).

- Enter the following in the CMake command arguments field:

-DOpenCV_DIR="C:\Program Files\OpenCV\build\x64\vc16\lib"

- Press Ctrl+S to save the changes.

Before you can access the OpenCV_DIR in the CMake GUI, make sure that the Grouped check box is deselected in the CMake GUI.

Adding OpenCV to the PATH Environment Variable#

You have to add the OpenCV bin directory to the PATH environment variable.

- Press the Win key.

- Type "environment".

- Click Edit environment variables for your account.

- Click the Environment Variables… button.

- In the User variables area, select Path.

- Click the Edit button.

- Click New.

- Enter the path of your OpenCV installation, e.g., C:\Program Files\OpenCV\build\x64\vc16\bin.

- Click OK.

Linux#

If CMake fails to find the OpenCV library, you have to either install the libopencv development package or build the OpenCV library from sources.

On Ubuntu systems, the libopencv development package can be installed by issuing the following command:

If you build the OpenCV library from sources and install it to a non-standard location, you can use the OpenCV_DIR CMake variable to specify the folder where the OpenCVConfig.cmake file is located.

Example:

Installing the Point Cloud Library#

Windows#

You can download the PCL All-in-one Installer for Windows from GitHub.

Make sure that you download a version of the PCL that is compatible with the Visual Studio version you are going to use.

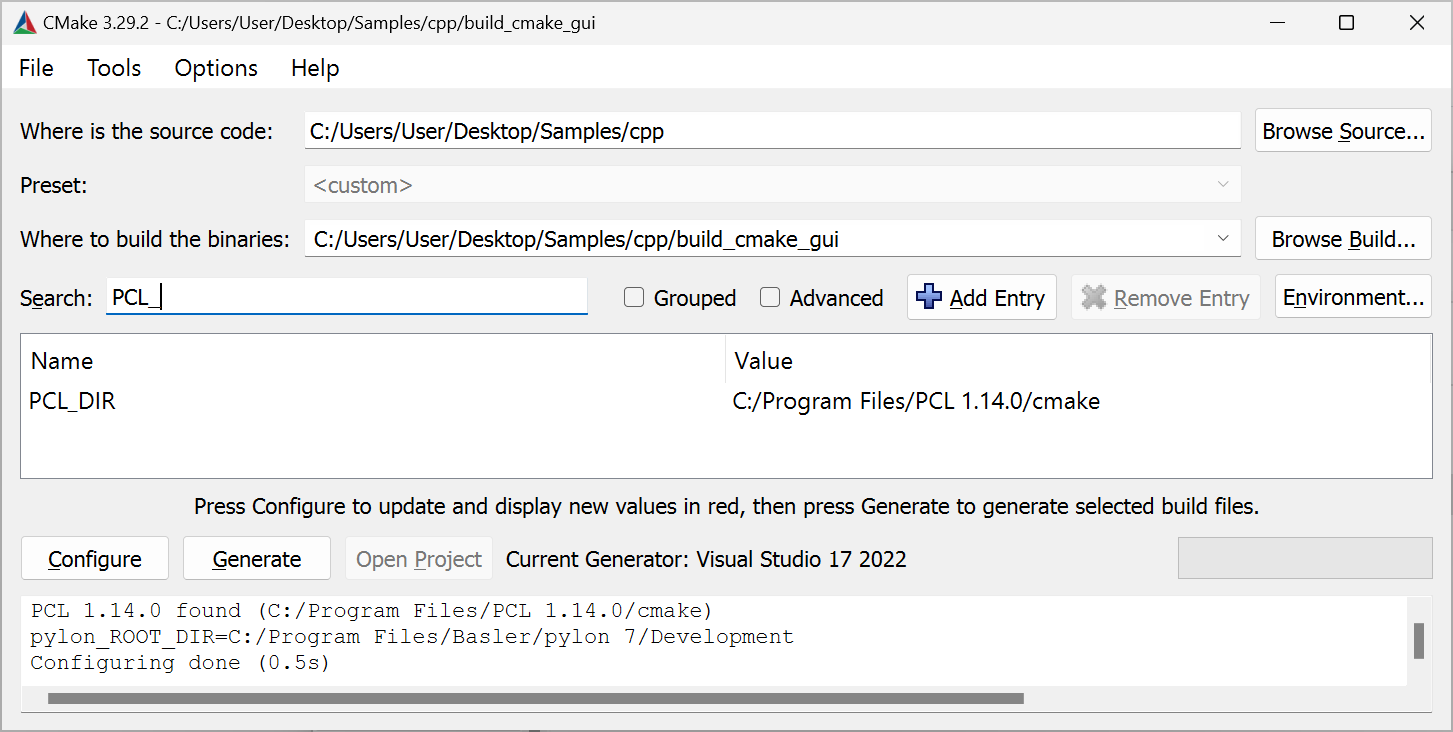

If CMake fails to find the PCL, set the PCL_DIR variable to the folder containing the PCLConfig.cmake file.

Example

If your Visual Studio version uses a CMakeSettings.json file:

- Open the CMakeSettings.json file (CMake -> Change CMake Settings -> blazeCppSamples).

- Add the following to the cmakeCommandArgs list:

-DPCL_DIR=\"C:\\Program Files\\PCL 1.9.1\\cmake\"

If your Visual Studio version provides the CMake Settings dialog:

- Open the CMake Settings dialog (Project -> CMakeSettings for blazeCppSamples).

- Enter the following in the CMake command arguments field, using the path that matches your PCL version, e.g., for PCL 1.12.1:

-DPCL_DIR="C:\Program Files\PCL 1.12.1\cmake"

or for PCL 1.14.0:

-DPCL_DIR="C:\Program Files\PCL 1.14.0\cmake"

- Press Ctrl+S to save the changes.

Before you can access the PCL_DIR in the CMake GUI, make sure that the Grouped check box is deselected in the CMake GUI.

When the PCL is installed using the PCL All-in-one Installer, the installer takes care of adding the PCL bin directory to the PATH environment variable. If you're using a different installation method, you have to add the bin directory to the PATH environment variable yourself.

The PCL All-in-one Installer installs the OpenNI2.dll into the %ProgramFiles%\OpenNI2\Redist folder. The DLL is required at runtime. Either add this folder to your PATH environment variable, or copy the OpenNI2.dll to the folder where the PCL binaries are located, e.g., %ProgramFiles%\PCL 1.13.0\bin.

Linux#

If CMake fails to find the PCL, you have to either install the libpcl development package or build the PCL from sources.

On Ubuntu systems, the libpcl development package can be installed by issuing the following command:

When you build the PCL from sources and install it to a non-standard location, the PCL_DIR CMake variable can be used to specify the location where the PCLConfig.cmake file is located.

Example:

Note

Samples using the Point Cloud Library (PCL) may crash depending on the versions of the PCL and VTK libraries installed on your system. The crash is due to a bug in the VTK libraries that are used by PCL for 3D visualization.

Source: https://github.com/PointCloudLibrary/pcl/issues/5237

Solution: PCL 1.13.1 contains fixes to work around the VTK bug.

Update to PCL 1.13.1 or higher. If required, uninstall the PCL libraries provided by your Linux distribution and build PCL version 1.13.1 or higher from sources (these can be retrieved from GitHub).

Installing the Boost Libraries#

Windows#

If you have built the Point Cloud Library (PCL) from sources or installed it using the All-in-one Installer as described above, the Boost libraries used for building the PCL will automatically be used for building the blaze samples as well.

If the PCL isn't available but you want to build the blaze samples that require the Boost libraries, you must either build the Boost libraries from source or install them, e.g., by using pre-built binaries that you can download from sourceforge.net. Download the installer suitable for the Visual Studio version you are using and the architecture you are building your applications for.

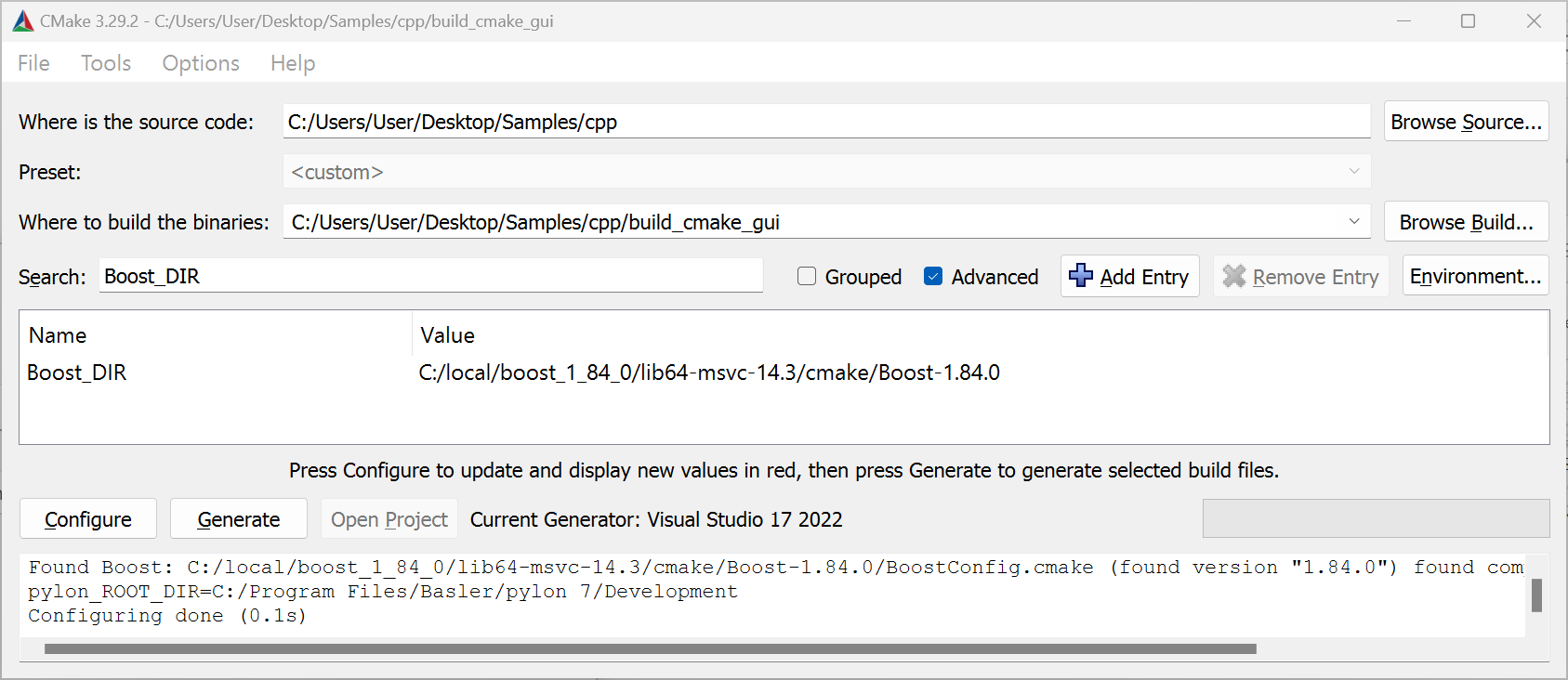

If CMake fails to detect the installed Boost libraries, you must set the Boost_DIR CMake variable. The variable must point to the directory containing the BoostConfig.cmake file.

Example

If your Visual Studio version uses a CMakeSettings.json file:

- Open the CMakeSettings.json file (CMake -> Change CMake Settings -> blazeCppSamples).

- Add the following to the cmakeCommandArgs list (the path shown is for the msvc-14.1 toolset):

-DBoost_DIR=\"C:\\local\\boost_1_84_0\\lib64-msvc-14.1\\cmake\\Boost-1.84.0\"

If your Visual Studio version provides the CMake Settings dialog:

- Open the CMake Settings dialog (Project -> CMakeSettings for blazeCppSamples).

- Enter the following in the CMake command arguments field, using the path that matches your toolset, e.g., for msvc-14.2:

-DBoost_DIR="C:\local\boost_1_84_0\lib64-msvc-14.2\cmake\Boost-1.84.0"

or for msvc-14.3:

-DBoost_DIR="C:\local\boost_1_84_0\lib64-msvc-14.3\cmake\Boost-1.84.0"

- Press Ctrl+S to save the changes.

Before you can access the Boost_DIR in the CMake GUI, make sure that the Advanced check box is selected and the Grouped check box is deselected in the CMake GUI.

Linux#

If CMake fails to find the Boost libraries, you have to either install the libboost development package or build the Boost libraries from sources.

On Ubuntu systems, the libboost development package can be installed by issuing the following command:

List of Samples#

- FirstSample: Provides an introduction to operating a blaze camera and focuses on how to retrieve 3D point cloud data. Refer to the following samples of the pylon C++ sample suite to learn more about different ways to implement a grab loop using pylon:

  - Grab_Strategies

  - Grab_UsingBufferFactory

  - Grab_UsingGrabLoopThread

- ParametrizeCamera: Illustrates how to access camera parameters.

- DeviceRemovalHandling: Illustrates how to detect and handle the removal of devices.

- GrabDepthMap: Illustrates how to grab and interpret depth maps that provide 3D information represented in a grayscale image.

- ConvertPointCloud2DepthMap: If you want to process 3D point clouds and depth maps at the same time, refer to the sample that illustrates how to extract depth maps from 3D point clouds. This sample also illustrates how to apply a rainbow color mapping to a depth map.

- ShowPointCloud_PCL: Illustrates how the Point Cloud Library (PCL) can be used to visualize the point clouds grabbed with a Basler blaze camera.

- ConvertAndFilter_PCL: Similar to ShowPointCloud_PCL. Illustrates how to apply a PCL filter to 3D point clouds.

- SavePointCloud: Illustrates the GrabSingleImage convenience method and how to save a point cloud to a .pcd text file.

- SaveAndLoadPointCloudUsingPCL: Illustrates how to more efficiently save point clouds to file using the PCL. The sample also shows how to load point clouds from file.

- FeatureDumper: Lists all camera parameters and their properties. Serves as an advanced example for how to use GenApi.

- DistortionCorrection: Illustrates how to disable distortion correction in order to use your own calibration data.

- ExtrinsicTransformation: Illustrates how to detect a main plane in the point cloud and how to calculate an extrinsic transformation and pass it on to the camera.

- MultiCam/ColorAndDepth/ColorAndDepthPtp: Illustrates how to grab images from both a Basler blaze and a Basler GigE color camera and how to access the image and depth data. The cameras are synchronized using PTP and the Synchronous Free Run feature.

- MultiCam/ColorAndDepth/Calibration: Illustrates how to calibrate a system consisting of a Basler GigE color camera and a Basler blaze camera.

- MultiCam/ColorAndDepth/ColorAndDepthFusion: Illustrates how the data from a Basler GigE color camera and a Basler blaze can be fused into a colored point cloud.

- MultiCam/ColorAndDepth/RgbdCamera: Similar to ColorAndDepthFusion, this sample illustrates how the data from a Basler blaze and a Basler GigE color camera can be fused into a colored point cloud. The sample utilizes the RgbdCamera class, which allows you to combine a 2D color camera and a blaze and interface the two cameras like a single RGB-D camera. The two combined cameras are synchronously triggered using PTP (IEEE1588) and the Synchronous Free Run feature. The source code of the RgbdCamera class is located in the same folder. To use the RgbdCamera class in your own application, add the RgbdCamera.h and RgbdCamera.cpp files to your project. In addition, you have to add the .h and .cpp files from the MultiCam/MultiCamHelper folder.

- MultiCam/DepthFusion/Calibration: Illustrates how to calibrate a system consisting of multiple Basler blaze cameras.

- MultiCam/DepthFusion/DepthFusion: Illustrates how to merge the depth data from any number of cameras to display a fused point cloud.

- MultiCam/MultiChannel/MultiChannelFreeRun: Illustrates how to operate several blaze cameras simultaneously without their light sources interfering with each other. The cameras are operated in free run mode.

- MultiCam/MultiChannel/MultiChannelSwTrigger: Illustrates how to operate several blaze cameras simultaneously without their light sources interfering with each other. The cameras are triggered using software triggers.

- MultiCam/SynchronousFreeRun/SynchronousFreeRun: Illustrates how to use PTP clock synchronization and the Synchronous Free Run feature to operate several blaze cameras simultaneously without their light sources interfering with each other.

- MultiCam/MultiCamHelper: A library providing convenience classes making it easy to synchronize the clocks of multiple cameras using PTP (IEEE1588) and to synchronously grab images from multiple cameras. The library is used by all samples using the Synchronous Free Run feature to acquire images from multiple cameras. To add the library to your project, simply add the .h and .cpp files from this folder to your project.

- Aravis/BasicSample: (Linux only) Illustrates how to configure and acquire data from blaze cameras using the open-source Aravis library.

- Aravis/PointCloudSample: (Linux only) Illustrates how to calculate 3D coordinates (i.e., point clouds) from data acquired using the Aravis library. The sample also illustrates how to save and visualize a point cloud using OpenCV.
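The ConvertPointCloud2DepthMap entry above mentions applying a rainbow color mapping to a depth map. As a rough illustration of the idea only, and not the code used in that sample, the following sketch maps a distance to a color on a red-to-blue ramp. The Rgb struct, the rainbowColor function, and the choice of ramp (near = red, far = blue) are assumptions made for this example.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Hypothetical color type for this sketch (not a pylon type).
struct Rgb {
    std::uint8_t r;
    std::uint8_t g;
    std::uint8_t b;
};

// Maps a distance to a color on a red -> yellow -> green -> cyan -> blue ramp.
// Distances outside [minDistance, maxDistance] are clamped to the ends.
Rgb rainbowColor(double distance, double minDistance, double maxDistance)
{
    if (!(maxDistance > minDistance)) {
        return Rgb{0, 0, 0}; // Degenerate range: return black.
    }
    double t = (distance - minDistance) / (maxDistance - minDistance);
    t = std::min(1.0, std::max(0.0, t));

    // Split the normalized range into four linear segments.
    const double scaled = t * 4.0;
    const int segment = std::min(3, static_cast<int>(scaled));
    const double f = scaled - segment; // Position within the segment, 0..1.
    const auto up = static_cast<std::uint8_t>(std::lround(255.0 * f));
    const auto down = static_cast<std::uint8_t>(std::lround(255.0 * (1.0 - f)));

    switch (segment) {
    case 0:  return Rgb{255, up, 0};   // red -> yellow
    case 1:  return Rgb{down, 255, 0}; // yellow -> green
    case 2:  return Rgb{0, 255, up};   // green -> cyan
    default: return Rgb{0, down, 255}; // cyan -> blue
    }
}
```

Applied per pixel, a function like this turns a grayscale depth map into a color image in which nearby and distant surfaces are easier to tell apart.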

How to Build Applications#

Windows#

Using Visual Studio Solutions#

Refer to the Common Settings for Building Applications with pylon section of the pylon C++ Programmer's Guide to learn how to set up a project for building applications using pylon.

Using CMake#

The pylon Supplementary Package for blaze includes a Findpylon.cmake module that is used to determine the installation location of pylon and the pylon Supplementary Package for blaze. The find module exports the pylon::pylon CMake target. Pass this target as argument to target_link_libraries, and CMake will take care of setting the include path, library path, etc.

A basic CMakeLists.txt file could look like this (variant requiring pylon 7.1 or above):

project (hello)

# Locate the CMake find module for pylon ...

# For Unix systems. On all other operating systems, PYLON_ROOT will be empty.

list(APPEND CMAKE_PREFIX_PATH $ENV{PYLON_ROOT})

# For Windows systems. On all other operating systems, PYLON_DEV_DIR will be empty.

list(APPEND CMAKE_PREFIX_PATH $ENV{PYLON_DEV_DIR})

find_package(pylon 7.1 REQUIRED)

add_executable(hello helloworld.cpp)

target_link_libraries(hello pylon::pylon)

A variant for pylon 6.2 or above that locates the Findpylon.cmake module explicitly:

project (hello)

# Locate the CMake find module for pylon ...

find_path(pylon_cmake Findpylon.cmake

HINTS "$ENV{PYLON_DEV_DIR}/lib/cmake"

"$ENV{ProgramFiles}/Basler/pylon 7/Development/lib/cmake"

"$ENV{ProgramFiles}/Basler/pylon 6/Development/lib/cmake"

)

# ... and extend the CMake module path accordingly.

list(APPEND CMAKE_MODULE_PATH ${pylon_cmake})

# Locate pylon.

find_package(pylon 6.2 REQUIRED)

add_executable(hello helloworld.cpp)

target_link_libraries(hello pylon::pylon)

Linux#

Using CMake#

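A basic CMakeLists.txt file could look like this (variant requiring pylon 7.1 or above):

project (hello)

# Locate the CMake find module for pylon ...

# For Unix systems. On all other operating systems, PYLON_ROOT will be empty.

list(APPEND CMAKE_PREFIX_PATH $ENV{PYLON_ROOT})

# For Windows systems. On all other operating systems, PYLON_DEV_DIR will be empty.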

list(APPEND CMAKE_PREFIX_PATH $ENV{PYLON_DEV_DIR})

find_package(pylon 7.1 REQUIRED)

add_executable(hello helloworld.cpp)

target_link_libraries(hello pylon::pylon)

A variant for pylon 6.2 or above that locates the Findpylon.cmake module explicitly:

project (hello)

# Locate the CMake find module for pylon ...

find_path(pylon_cmake Findpylon.cmake

HINTS "/opt/pylon/lib/cmake"

)

# ... and extend the CMake module path accordingly.

list(APPEND CMAKE_MODULE_PATH ${pylon_cmake})

# Locate pylon.

find_package(pylon 6.2 REQUIRED)

add_executable(hello helloworld.cpp)

target_link_libraries(hello pylon::pylon)

Using Make Files#

Refer to the Common Settings for Building Applications with pylon section of the pylon C++ Programmer's Guide to learn how to adjust compiler and linker settings for building applications with pylon.

Instant Camera Class for blaze Cameras#

pylon provides different types of classes for accessing camera devices. Apart from low-level IPylon devices, there are the so-called Instant Camera classes that provide a convenient high-level API for cameras.

The Pylon::CInstantCamera class provides generic access to all Basler cameras, including blaze cameras.

The Pylon::CBaslerUniversalInstantCamera class extends the generic Pylon::CInstantCamera class by parameter accessor objects that make it easy to set and get camera parameters using IntelliSense/code completion.

The Pylon::CBaslerUniversalInstantCamera class doesn't provide parameter accessors for every blaze camera feature. Therefore, the pylon Supplementary Package for blaze includes the Pylon::CBlazeInstantCamera class. Basler recommends using that class with Basler blaze cameras instead.

If you choose the CBaslerUniversalInstantCamera class instead of the CBlazeInstantCamera class, you can still set and get all camera parameters provided by the blaze camera. If the CBaslerUniversalInstantCamera class doesn't provide an accessor object for a parameter, use generic parameter access to access the parameter by its name, as explained in the Generic Parameter Access section of the pylon C++ Programmer's Guide.

Example:

using namespace Pylon;

GenApi::INodeMap& nodemap = camera.GetNodeMap();

CIntegerParameter filterStrength(nodemap, "FilterStrength" );

std::cout << "Filter strength: " << filterStrength.GetValue() << std::endl;

Opening and Accessing blaze Cameras#

It takes three steps to create and open a blaze camera object:

- Create a camera object for the camera you want to access.

Usually, you should create an instance of the Pylon::CBlazeInstantCamera class.

- Register the blaze-specific Pylon::CBlazeDefaultConfiguration configuration event handler that applies a default configuration.

Refer to the Registering Camera Configuration Handlers section for more details about the blaze configuration event handler classes.

- Call the Open() method to establish a connection to the camera device.

The following example illustrates the three steps:

#include <pylon/PylonIncludes.h>

#include <pylon/BlazeInstantCamera.h>

#include <iostream>

using namespace Pylon;

// ....

// Before using any pylon methods, the pylon runtime must be initialized.

PylonInitialize();

try {

// 1. Create a camera object for the first available blaze camera.

CBlazeInstantCamera camera(CTlFactory::GetInstance().CreateFirstDevice(

CDeviceInfo().SetDeviceClass(BaslerGenTlBlazeDeviceClass))

);

// 2. Register the default configuration.

camera.RegisterConfiguration(

new CBlazeDefaultConfiguration,

RegistrationMode_ReplaceAll,

Cleanup_Delete);

// 3. Open the camera, i.e., establish a connection to the camera device.

camera.Open();

// Use the camera.

std::cout << "Connected to camera "

<< camera.GetDeviceInfo().GetFriendlyName() << std::endl;

// .....

// Close the connection.

camera.Close();

} catch (const GenICam::GenericException& e) {

std::cerr << "Exception occurred: " << std::endl

<< e.GetDescription() << std::endl;

}

// Releases all pylon resources.

PylonTerminate();

In case of errors, exceptions inheriting from GenICam::GenericException are thrown.

Typical error conditions:

- No blaze camera found: The CreateFirstDevice() function throws an exception.

- Camera can't be opened, e.g., because it is already opened by another application like the blaze Viewer: The Open() method throws an exception.

The following subsections provide more detailed information about how to create and open a camera object.

Creating a Camera Object for the First Available blaze Camera#

Use the Pylon::CTlFactory::CreateFirstDevice() method to create a camera object for the first available camera. You should always specify the pylon device class identifier for blaze cameras to prevent CreateFirstDevice() from creating a camera object for a different type of camera that might be attached to your system or reachable via the network:

CBlazeInstantCamera camera(CTlFactory::GetInstance().CreateFirstDevice(

CDeviceInfo().SetDeviceClass(BaslerGenTlBlazeDeviceClass)));

If no blaze camera is found, check the camera's and your network adapter's IP configuration settings. Refer to the Network Configuration topic for more information about setting up your network.

Creating a Camera Object for a Specific blaze Camera#

The Creating Specific Cameras section in the Advanced Topics topic of the pylon C++ Programmer's Guide provides information about selecting a specific camera.

If you want to open a specific blaze camera, e.g., by specifying its serial number, in addition to passing the pylon device class identifier for blaze cameras to CreateFirstDevice(), you can specify further properties.

The following code snippet illustrates how to open a blaze camera by serial number.

CBlazeInstantCamera camera(CTlFactory::GetInstance().CreateFirstDevice(

CDeviceInfo()

.SetDeviceClass(BaslerGenTlBlazeDeviceClass)

.SetSerialNumber("23298299")

));

To open a blaze camera by user-defined name, use the following lines:

CBlazeInstantCamera camera(CTlFactory::GetInstance().CreateFirstDevice(

CDeviceInfo()

.SetDeviceClass(BaslerGenTlBlazeDeviceClass)

.SetUserDefinedName("MyCamera")));

User-Defined Names

You can assign a user-defined name to a camera using the DeviceUserID parameter. You can do this in the blaze Viewer, the pylon IP Configurator, or the pylon API, e.g., by calling camera.DeviceUserID.SetValue("MyCamera") on an open camera.

Creating a Device by Its IP Address

Currently, you can't specify the camera IP address when calling the CreateFirstDevice() function. The reason is that the Pylon::CDeviceInfo class doesn't contain any information about the camera's IP address.

If you want to create and open a camera with a specific IP address, you first have to enumerate all cameras, iterate the returned list, and open each camera before you can query the IP address and other network-related information. This procedure is shown in the Enumerating blaze Cameras section.

If the desired blaze camera is not found, check whether you're using the right values for the properties and check your camera's and your network adapter's IP configuration settings. Refer to the Network Configuration topic for more information about setting up your network.

Enumerating blaze Cameras#

The following code snippet illustrates how to retrieve a list of all connected blaze cameras. For each element of the list, the camera is opened and some camera parameter values are printed.

#include <pylon/PylonIncludes.h>

#include <pylon/BlazeInstantCamera.h>

#include <iostream>

using namespace Pylon;

// ....

// Before using any pylon methods, the pylon runtime must be initialized.

PylonInitialize();

// ....

// Enumerate all blaze cameras.

CTlFactory& TlFactory = CTlFactory::GetInstance();

DeviceInfoList_t lstDevices;

DeviceInfoList_t filter;

filter.push_back(CDeviceInfo().SetDeviceClass(BaslerGenTlBlazeDeviceClass));

TlFactory.EnumerateDevices(lstDevices, filter);

if (!lstDevices.empty()) {

DeviceInfoList_t::const_iterator it;

for (it = lstDevices.begin(); it != lstDevices.end(); ++it) {

std::cout << it->GetFullName() << std::endl;

// Open camera, i.e., establish a connection to the camera device.

CBlazeInstantCamera camera(CTlFactory::GetInstance().CreateDevice(*it));

camera.RegisterConfiguration(

new CBlazeDefaultConfiguration,

RegistrationMode_ReplaceAll, Cleanup_Delete);

camera.Open();

// Print out some information.

std::cout << "Connected to camera "

<< camera.GetDeviceInfo().GetFriendlyName() << std::endl;

std::cout << "IP Address: "

<< camera.GevCurrentIPAddress.GetValue() << std::endl;

std::cout << "User-defined name: "

<< camera.DeviceUserID.GetValue() << std::endl;

std::cout << "Serial Number: "

<< camera.DeviceSerialNumber.GetValue() << std::endl;

} // The connection will be closed automatically when leaving the scope here.

} else {

std::cerr << "No devices found!" << std::endl;

}
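If GevCurrentIPAddress is printed as a plain number in the example above, a small helper along the following lines can turn it into dotted-decimal notation. This is a sketch that assumes the parameter delivers the IPv4 address as a 32-bit integer with the first octet in the most significant byte. formatIpv4 is a hypothetical helper name and not part of the pylon API.

```cpp
#include <cstdint>
#include <string>

// Converts a 32-bit IPv4 address (first octet in the most significant
// byte, which is an assumption of this sketch) to dotted-decimal text.
std::string formatIpv4(std::uint32_t address)
{
    std::string text;
    for (int shift = 24; shift >= 0; shift -= 8) {
        text += std::to_string((address >> shift) & 0xFFu);
        if (shift > 0) {
            text += '.';
        }
    }
    return text;
}
```

Inside the loop, the result of formatIpv4(static_cast<std::uint32_t>(camera.GevCurrentIPAddress.GetValue())) could then be compared with the IP address you are looking for in order to select a specific camera.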

Registering Camera Configuration Handlers#

pylon Instant Camera classes allow you to register configuration event handler objects that can be used to apply camera settings on certain events, for example when the Open() method is called.

The pylon Supplementary Package for blaze includes the Pylon::CBlazeDefaultConfiguration class. Basler recommends always registering this configuration for each instance of the CBlazeInstantCamera class you create.

camera.RegisterConfiguration(

new CBlazeDefaultConfiguration,

RegistrationMode_ReplaceAll, Cleanup_Delete);

The CBlazeDefaultConfiguration::OnOpened() method configures the data stream to contain depth, intensity, and confidence information. Depth information is sent as point clouds. A point cloud contains 3D coordinates for each sensor pixel. The CBlazeDefaultConfiguration::OnOpened() method also puts the camera into the so-called free run mode. In free run mode, the camera continuously streams data without requiring software or hardware triggers after the acquisition has been started.

If the default configuration is registered, the CBlazeDefaultConfiguration::OnOpened() method gets called automatically by CBlazeInstantCamera::Open().

The CBlazeDefaultConfiguration class is a header-only class. You can inspect the code by opening the BlazeDefaultConfiguration.h file that is located in the includes folder of the pylon SDK:

- Windows: %Program Files%\pylon\Development\include\pylon

- Linux: /opt/pylon/include/pylon

You can register multiple configuration event handler classes.

The following example illustrates how to register a configuration event handler that applies additional settings:

class MyConfigurationHandler : public CBlazeConfigurationEventHandler

{

public:

// Sets exposure time to minimum possible value.

void OnOpened(CBlazeInstantCamera& camera) override

{

camera.ExposureTime.SetToMinimum();

}

};

// ....

// Register the default configuration first.

camera.RegisterConfiguration(

new CBlazeDefaultConfiguration,

RegistrationMode_ReplaceAll, Cleanup_Delete);

// Then, register additional configuration event handlers.

camera.RegisterConfiguration(

new MyConfigurationHandler,

RegistrationMode_Append, Cleanup_Delete);

// ....

camera.Open(); // Configurations will be applied.
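The order of the two RegisterConfiguration() calls above matters. The following toy model is not pylon code. It only mimics the replace-all and append semantics with hypothetical names (ToyCamera, ToyRegistrationMode) and assumes that handlers run in registration order when the camera is opened, as the comments in the example suggest.

```cpp
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for the registration modes used above.
enum class ToyRegistrationMode { ReplaceAll, Append };

// Toy model of a camera that keeps a list of configuration handlers.
class ToyCamera {
public:
    using Handler = std::function<void(std::vector<std::string>&)>;

    void registerHandler(Handler handler, ToyRegistrationMode mode)
    {
        if (mode == ToyRegistrationMode::ReplaceAll) {
            handlers_.clear(); // Drops everything registered before.
        }
        handlers_.push_back(std::move(handler));
    }

    // Runs all handlers in registration order and returns what they did.
    std::vector<std::string> open() const
    {
        std::vector<std::string> applied;
        for (const Handler& handler : handlers_) {
            handler(applied);
        }
        return applied;
    }

private:
    std::vector<Handler> handlers_;
};
```

In this model, registering the default handler with ReplaceAll and then a custom handler with Append yields both handlers on open(). Swapping the two calls leaves only the default handler, because ReplaceAll discards the custom handler registered before it.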

Refer to the Instant Camera Event Handler Basics section of the pylon C++ Programmer's Guide for more information about pylon camera event handler classes.

You don't have to apply your desired settings by providing a configuration event handler. Instead, you can set the parameters you want to change at any time after calling the CBlazeInstantCamera::Open() method.

Refer to the Accessing Parameters section for information about how to set and get camera parameter values.

Connecting to a Camera#

After creating a camera object and registering a configuration event handler, the connection to the camera is established by calling the CBlazeInstantCamera::Open() method.

The connection is kept alive until the CBlazeInstantCamera::Close() method is called. The destructor of CBlazeInstantCamera automatically calls Close() if it hasn't been called explicitly.

The camera closes the connection if it doesn't periodically receive heartbeat requests from the application. Heartbeat requests stop arriving if the application crashes or is interrupted by a debugger. Refer to the Debugging Applications and Controlling the GigE Vision Heartbeat section for more details.

Accessing Parameters#

Refer to the Accessing Parameters section of the pylon C++ Programmer's Guide to get familiar with how to access camera parameters using the pylon API.

The pylon C++ samples Parametrize_NativeParameterAccess and Parametrize_GenericParameterAccess illustrate typical methods for accessing camera parameters using pylon.

The following code sample illustrates how to set and get frequently used blaze-specific camera parameters using the CBlazeInstantCamera class.

// Include files to use the pylon API
#include <pylon/PylonIncludes.h>
#include <pylon/BlazeInstantCamera.h>
#include <iostream>

// Namespaces for using the pylon API and the blaze camera parameters
using namespace Pylon;
using namespace BlazeCameraParams_Params;

// ...

// Set the operating mode of the camera. The choice you make here
// affects the working range of the camera, i.e., the Minimum Working
// Range and Maximum Working Range parameters.
OperatingModeEnums oldOperatingMode = camera.OperatingMode.GetValue();
camera.OperatingMode.SetValue(OperatingMode_LongRange);

// Exposure time of the camera. If the operating mode is changed, the
// exposure time is set to the recommended default value.
camera.ExposureTime.SetValue(750); // us

// Enable and configure image filtering.
// The spatial noise filter uses the values of neighboring pixels to
// filter out noise in an image.
camera.SpatialFilter.SetValue(true);

// The temporal noise filter uses the values of the same pixel at
// different points in time to filter out noise in an image.
camera.TemporalFilter.SetValue(true);
camera.TemporalFilterStrength.SetValue(220);

// The outlier removal removes pixels that differ significantly from
// their local environment.
camera.OutlierRemoval.SetValue(true);

// Some properties have restrictions.
// We use API functions that automatically perform value corrections.
// Alternatively, you can use GetInc() / GetMin() / GetMax() to make sure you
// set a valid value.
camera.ConfidenceThreshold.SetValue(321, IntegerValueCorrection_Nearest);

// Not all functions are available in older cameras.
// Therefore, we must use "Try" functions that only perform the action
// when parameters are writable. Otherwise, we would get an exception.
camera.MultiCameraChannel.TrySetValue(1);

std::cout << "Operating Mode : "
          << camera.OperatingMode.GetValue() << std::endl;
std::cout << "Exposure Time : "
          << camera.ExposureTime.GetValue() << std::endl;
std::cout << "Spatial Filter : "
          << camera.SpatialFilter.GetValue() << std::endl;
std::cout << "Temporal Filter : "
          << camera.TemporalFilter.GetValue() << std::endl;
std::cout << "Temporal Filter Strength : "
          << camera.TemporalFilterStrength.GetValue() << std::endl;
std::cout << "Outlier Removal : "
          << camera.OutlierRemoval.GetValue() << std::endl;
std::cout << "Confidence Threshold : "
          << camera.ConfidenceThreshold.GetValue() << std::endl;
if (camera.MultiCameraChannel.IsReadable())
    std::cout << "Multi-Camera Channel : "
              << camera.MultiCameraChannel.GetValue() << std::endl;

// Restore the old operating mode.
camera.OperatingMode.SetValue(oldOperatingMode);

// Close the camera.
camera.Close();
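The value correction requested with IntegerValueCorrection_Nearest in the sample above can be pictured with a small standalone sketch. It is not pylon code: the helper name nearestValidValue is hypothetical, the limits used with it are assumptions for illustration, and the rounding of exact ties is a choice made here rather than documented camera behavior. In a real application, read the limits from the camera with GetMin(), GetMax(), and GetInc().

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical helper, not part of the pylon API. Valid values are assumed
// to be lo, lo + step, lo + 2 * step, ... up to at most hi.
inline std::int64_t nearestValidValue(std::int64_t value, std::int64_t lo,
                                      std::int64_t hi, std::int64_t step)
{
    assert(step > 0 && lo <= hi);
    // Clamp the requested value into the allowed range.
    const std::int64_t clamped = std::max(lo, std::min(value, hi));
    // Round the offset from the minimum to the nearest multiple of step.
    const std::int64_t steps = (clamped - lo + step / 2) / step;
    // Largest valid value that does not exceed hi.
    const std::int64_t last = lo + ((hi - lo) / step) * step;
    return std::min(lo + steps * step, last);
}
```

With an assumed range of 0 to 65535 and an assumed increment of 16, a request for 321 would be corrected to 320 by this sketch.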

The blaze Viewer provides a Documentation pane. When you select a camera parameter in the Features pane, the Documentation pane displays information about the selected parameter as well as C++ and C# code snippets that illustrate how to get and set the value of that camera parameter.

Acquiring Data#

Refer to the Grabbing Images section of the pylon C++ Programmer's Guide to get familiar with how to acquire data using the pylon API.

pylon represents grabbed images as data structures called GrabResults. GrabResults acquired by a 2D camera contain a single gray-value or color image.

GrabResults grabbed by blaze cameras contain multiple components. By default, each GrabResult stores an intensity image, a confidence map, and depth information.

Depending on the pixel format set for the Range component, depth data can be represented as 2D depth maps or 3D point clouds. By default, depth data is represented as point clouds.

To enable depth maps, set the pixel format for the Range component to PixelFormat_Coord3D_C16:

// Enable depth maps by enabling the Range component and setting the
// appropriate pixel format.
camera.ComponentSelector.SetValue(ComponentSelector_Range);
camera.ComponentEnable.SetValue(true);
camera.PixelFormat.SetValue(PixelFormat_Coord3D_C16);

To enable point clouds, set the pixel format for the Range component to PixelFormat_Coord3D_ABC32f:

// Enable point clouds by enabling the Range component and setting the
// appropriate pixel format.
camera.ComponentSelector.SetValue(ComponentSelector_Range);
camera.ComponentEnable.SetValue(true);
camera.PixelFormat.SetValue(PixelFormat_Coord3D_ABC32f);

Refer to the Component Selector and Pixel Format topics for more details.

Info

It isn't possible to let the camera send depth information as point clouds and depth maps at the same time.

The Processing Measurement Results topic explains how to calculate a point cloud from a depth map.

This conversion is also shown in the GrabDepthMap C++ programming sample for blaze cameras.

How to retrieve a depth map from a point cloud is shown in the ConvertPointCloud2DepthMap C++ programming sample for blaze cameras.
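The choice between the two Range pixel formats also affects how much memory the Range component occupies. The following standalone sketch illustrates this under stated assumptions: the enum RangeFormat and both functions are hypothetical and not part of the pylon API, Coord3D_C16 is taken to store one 16-bit value per pixel, and Coord3D_ABC32f is taken to store three 32-bit floats per pixel.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-ins for the two Range pixel formats discussed above.
enum class RangeFormat { DepthMapC16, PointCloudABC32f };

// Bytes needed for one pixel of the Range component.
inline std::size_t bytesPerPixel(RangeFormat format)
{
    switch (format) {
    case RangeFormat::DepthMapC16:
        return sizeof(std::uint16_t);   // one 16-bit depth value
    case RangeFormat::PointCloudABC32f:
        return 3 * sizeof(float);       // x, y, z as 32-bit floats
    }
    assert(false && "unknown format");
    return 0;
}

// Size in bytes of a tightly packed Range component without padding.
inline std::size_t rangeBufferSize(std::size_t width, std::size_t height,
                                   RangeFormat format)
{
    return width * height * bytesPerPixel(format);
}
```

For an assumed resolution of 640 x 480 pixels, this gives 614400 bytes for a depth map and 3686400 bytes for a point cloud. In an application, prefer the sizes reported by the component itself over computed values.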

Grab Loop#

pylon supports different approaches for setting up a grab loop and provides different strategies for handling memory buffers.

Refer to the Grabbing Images section of the pylon C++ Programmer's Guide for more details.

The following code snippet illustrates a typical grab loop:

#include <pylon/PylonIncludes.h>
#include <pylon/BlazeInstantCamera.h>
#include <iostream>

using namespace Pylon;
using namespace BlazeCameraParams_Params;

// ....

PylonInitialize();

CBlazeInstantCamera camera(CTlFactory::GetInstance().CreateFirstDevice(
    CDeviceInfo().SetDeviceClass(BaslerGenTlBlazeDeviceClass)));
camera.RegisterConfiguration(
    new CBlazeDefaultConfiguration, RegistrationMode_ReplaceAll, Cleanup_Delete);
camera.Open();

size_t nBuffersGrabbed = 0;

// This smart pointer will receive the grab result data.
CGrabResultPtr ptrGrabResult;

camera.StartGrabbing();
while (camera.IsGrabbing() && nBuffersGrabbed < 10) {
    // Wait for an image and then retrieve it. A timeout of 1000 ms is used.
    camera.RetrieveResult(1000, ptrGrabResult, TimeoutHandling_ThrowException);

    // Data grabbed successfully?
    if (ptrGrabResult->GrabSucceeded()) {
        nBuffersGrabbed++;
        // Access the data.
        // ....
    } else {
        // Error handling
        std::cerr << "Grab error occurred: " << ptrGrabResult->GetErrorDescription() << std::endl;
    }
}

// Clean-up
camera.StopGrabbing();
camera.Close();

Accessing Components#

To access the individual components of a GrabResult, you can use the Pylon::CPylonDataContainer and Pylon::CPylonDataComponent classes. A container can hold one or more components. You can use the container to query the number of components and to retrieve a specific component. Each component in the container holds the actual data, e.g., the depth values, as well as its metadata.

Use the Pylon::CGrabResultData::GetDataContainer() method to get access to a GrabResult's CPylonDataContainer. Use the Pylon::CPylonDataContainer::GetDataComponent() method to access a component by specifying the index of the component.

Refer to the Multi-Component Grab Results section in the Advanced Topics topic of the pylon C++ Programmer's Guide for more information about how pylon provides access to GrabResults containing multiple components.

Example:

#pragma pack(push, 1)
struct Point
{
    float x;
    float y;
    float z;
};
#pragma pack(pop)

// ...

// By registering the CBlazeDefaultConfiguration, depth, intensity, and confidence data will be delivered.
// Depth data is represented as point clouds.
camera.RegisterConfiguration(new CBlazeDefaultConfiguration, RegistrationMode_ReplaceAll, Cleanup_Delete);
camera.Open();

//....

if (ptrGrabResult->GrabSucceeded())
{
    // Get access to the container.
    auto container = ptrGrabResult->GetDataContainer();

    // Access the container's components.
    // Iterate through all components in the container.
    for (size_t i = 0; i < container.GetDataComponentCount(); ++i)
    {
        // Get one component from the container.
        const Pylon::CPylonDataComponent component = container.GetDataComponent(i);
        if (!component.IsValid())
            continue;

        // Is this the intensity component?
        if (component.GetComponentType() == Pylon::ComponentType_Intensity)
        {
            const Pylon::EPixelType pixelType = component.GetPixelType();
            // Get a pointer to the pixel data.
            const void* pPixels = component.GetData();
            // Process intensity values here (pixel data).
            // [...]
        }
    }

    // With all three components enabled, they can also be accessed by index.
    auto rangeComponent = container.GetDataComponent(0);
    auto intensityComponent = container.GetDataComponent(1);
    auto confidenceComponent = container.GetDataComponent(2);

    // Retrieve the 3D coordinates corresponding to the center pixel.
    const auto width = rangeComponent.GetWidth();
    const auto height = rangeComponent.GetHeight();
    const uint32_t u = (int)(0.5 * width);
    const uint32_t v = (int)(0.5 * height);
    auto pPoint =
        reinterpret_cast<const Point*>(rangeComponent.GetData()) + u + v * width;
    const uint16_t* pIntensity =
        static_cast<const uint16_t*>(intensityComponent.GetData()) + v * width + u;
    const uint16_t* pConfidence =
        static_cast<const uint16_t*>(confidenceComponent.GetData()) + v * width + u;

    // A z value of 0 marks a pixel without valid depth data.
    if (pPoint->z != 0)
        std::cout << "x=" << pPoint->x
                  << " y=" << pPoint->y
                  << " z=" << pPoint->z << "\n";
    else
        std::cout << "x= n/a y= n/a z= n/a\n";
    std::cout << " intensity=" << *pIntensity
              << " confidence=" << *pConfidence << "\n";
}
else
{
    // Error handling
    std::cerr << "Grab error occurred: " << ptrGrabResult->GetErrorDescription() << std::endl;
}
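The pixel addressing used in the example above (column u plus row v times width, with a z value of 0 treated as not available) can be tried out without a camera. The following is a minimal standalone sketch: the helper validPointAt is hypothetical and not part of the pylon API, and a std::vector stands in for the buffer returned by GetData().

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Point
{
    float x;
    float y;
    float z;
};

// Hypothetical helper: returns the point at column u, row v of a row-major
// point cloud, or nullptr if the pixel is out of bounds or has z == 0.
inline const Point* validPointAt(const std::vector<Point>& cloud,
                                 std::size_t width, std::size_t height,
                                 std::size_t u, std::size_t v)
{
    assert(cloud.size() == width * height);
    if (u >= width || v >= height)
        return nullptr;
    const Point& p = cloud[v * width + u];
    return (p.z != 0.0f) ? &p : nullptr;
}
```

Returning a null pointer for both cases keeps the caller's check to a single test before the coordinates are used.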

Using the ComponentSelector and ComponentEnable camera parameters, you can enable or disable individual components. For blaze cameras, the components in a container are always in the following order:

- Range

- Intensity

- Confidence

For example, if the Range and Confidence components are enabled and the Intensity component is disabled, container.GetDataComponent(0) returns the Range component and container.GetDataComponent(1) returns the Confidence component. Trying to access container.GetDataComponent(2) results in an error because, with the Intensity component disabled, the container holds only two components.
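The index shift described above can be expressed as a small standalone sketch. The enum Component and the helper containerIndex are hypothetical and not part of the pylon API; they only model the fixed Range, Intensity, Confidence order and the effect of disabled components on the container index.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Fixed component order for blaze cameras as described above.
enum Component : std::size_t { Range = 0, Intensity = 1, Confidence = 2 };

// Hypothetical helper: returns the container index of `wanted` given which
// components are enabled, or -1 if `wanted` itself is disabled.
inline int containerIndex(const std::array<bool, 3>& enabled, Component wanted)
{
    if (!enabled[wanted])
        return -1;
    int index = 0;
    // Every enabled component that precedes `wanted` occupies one slot.
    for (std::size_t c = 0; c < wanted; ++c)
        if (enabled[c])
            ++index;
    return index;
}
```

With Range and Confidence enabled and Intensity disabled, this sketch yields index 0 for Range, index 1 for Confidence, and -1 for Intensity.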

If the part of your application that processes grab results doesn't know in advance which components to expect, you should choose a more generic way of accessing the components by iterating through the container and checking each component's type by using the CPylonDataComponent::GetComponentType() method.

Example:

camera.RetrieveResult(1000, ptrGrabResult, TimeoutHandling_ThrowException);

// Data grabbed successfully?
if (ptrGrabResult->GrabSucceeded()) {
    // Access the data.
    auto container = ptrGrabResult->GetDataContainer();
    CPylonDataComponent rangeComponent;
    CPylonDataComponent intensityComponent;
    CPylonDataComponent confidenceComponent;

    // Iterate through all components in the container.
    for (size_t i = 0; i < container.GetDataComponentCount(); ++i) {
        const Pylon::CPylonDataComponent component = container.GetDataComponent(i);
        if (!component.IsValid())
            continue;
        switch (component.GetComponentType())
        {
        case ComponentType_Intensity:
            intensityComponent = component;
            break;
        case ComponentType_Confidence:
            confidenceComponent = component;
            break;
        case ComponentType_Range:
            rangeComponent = component;
            break;
        }
    }

    if (rangeComponent.IsValid()) {
        const auto width = rangeComponent.GetWidth();
        const auto height = rangeComponent.GetHeight();
        // Process depth data.
    }
    if (intensityComponent.IsValid()) {
        // Process intensity image.
    }
    if (confidenceComponent.IsValid()) {
        // Process confidence data.
    }
}

Interpreting Depth Data#

The Processing Measurement Results topic provides information about the format of the depth data stored in the Range component.

Debugging Applications and Controlling the GigE Vision Heartbeat#

GigE Vision cameras like the blaze cameras require the application to periodically access the camera to allow the camera to check whether the application controlling the camera is still running. Therefore, the transport layer periodically sends network requests to the camera (heartbeats). If a camera doesn't receive these heartbeats within a period of time specified by the camera's heartbeat timeout settings, it considers the connection to be broken and closes the connection, i.e., it won't accept any commands from the application anymore. The default value for the heartbeat timeout is 3000 ms.

When you're debugging your application and hit a breakpoint, the debugger suspends all threads, including the one sending the heartbeats. To prevent the camera from closing the connection while an application is suspended or while you're single-stepping through your code, the transport layer automatically increases the heartbeat timeout (to 1 hour if you're using pylon 7.1 or above, to 5 minutes otherwise). If you're using pylon 7.1 or above, the connection to the camera is automatically closed when the application is terminated or crashes.
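The heartbeat mechanism described above can be modeled as a simple watchdog. The following standalone sketch is a conceptual model only: the class HeartbeatWatchdog is hypothetical, it is neither camera firmware nor pylon code, and time points are passed in explicitly so that the behavior can be checked without waiting.

```cpp
#include <cassert>
#include <chrono>

// Conceptual model of the camera-side heartbeat check (an assumption made
// for illustration, not the actual implementation).
class HeartbeatWatchdog
{
public:
    using Clock = std::chrono::steady_clock;

    HeartbeatWatchdog(std::chrono::milliseconds timeout, Clock::time_point now)
        : m_timeout(timeout), m_lastHeartbeat(now)
    {
        assert(timeout.count() > 0);
    }

    // Called whenever a heartbeat request arrives from the application.
    void onHeartbeat(Clock::time_point now) { m_lastHeartbeat = now; }

    // True once no heartbeat has arrived within the timeout.
    bool connectionLost(Clock::time_point now) const
    {
        return now - m_lastHeartbeat > m_timeout;
    }

private:
    std::chrono::milliseconds m_timeout;
    Clock::time_point m_lastHeartbeat;
};
```

In this model, an application that is suspended at a breakpoint for 10 seconds would lose the connection with the default timeout of 3000 ms, but not with a timeout of 5 minutes or 1 hour.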

Reconnection Problems When Using pylon 7.0 or Below

If an application is terminated or crashes before it properly closed the connection to the camera, the camera keeps the connection open until the heartbeat timeout expires. Until the heartbeat timeout has expired, the camera refuses any further connection attempt. When restarting your application or starting another application requesting access to the camera, you will receive an error stating that the device is currently in use.

This usually happens if you stop your application using the debugger or if your application terminates unexpectedly. To open the camera again, you must either wait until the timeout has elapsed or temporarily disconnect the camera from the network.

To work around this, you can override the automatic adjustment of the heartbeat timeout when the application is running under the control of a debugger by setting the environment variable named GEV_HEARTBEAT_TIMEOUT to the desired timeout in milliseconds. Alternatively, you can set the heartbeat timeout in your application using the pylon API as shown in the DeviceRemovalHandling sample program.